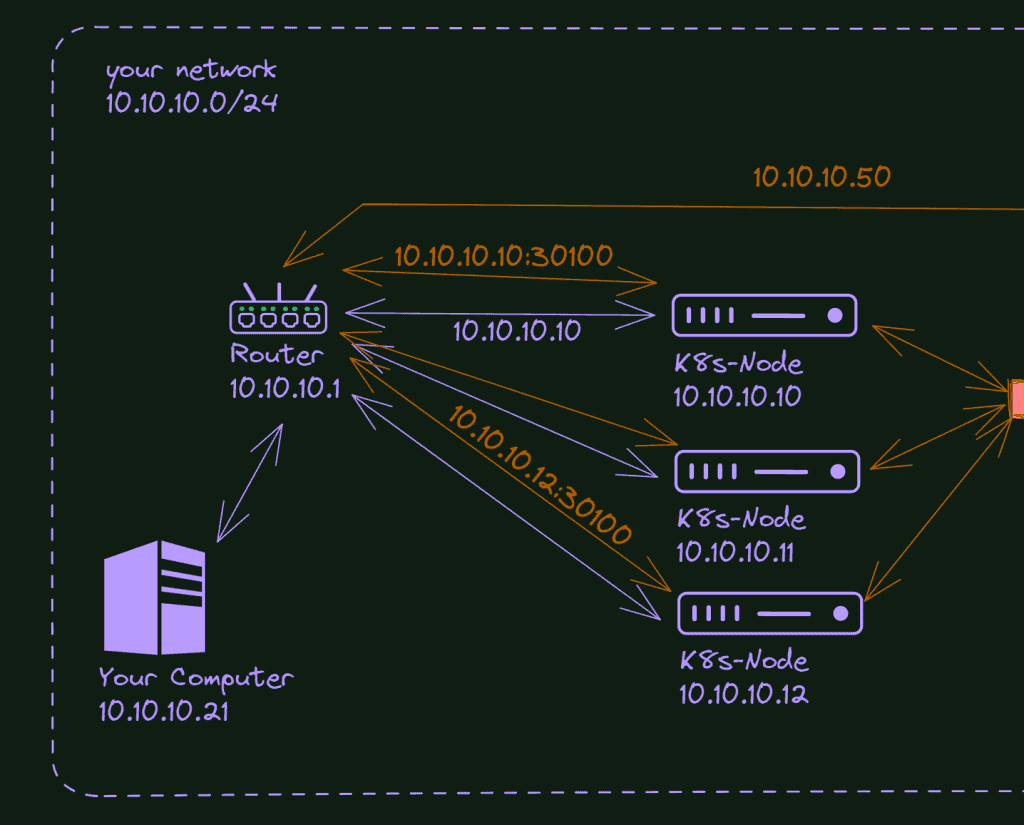

Kubernetes is huge, and sooner or later you find yourself asking: How much deeper should I explore this technology? Which path should I follow? The answer depends on the job market in your region and on your personal goals. Today I will help you clarify it with a curated list of Kubernetes features, their general importance, and the resources or paths to follow as you learn Kubernetes.

Core Components

Begin by exploring the core components of Kubernetes, including:

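- Docker: Docker is the most widely used tool for building and running containers. It enables developers to package applications and their dependencies into container images, providing consistency and portability across different environments. Kubernetes runs these images through a container runtime such as containerd or CRI-O; since version 1.24 it no longer talks to Docker Engine directly, but images built with Docker run unchanged.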

- Deployments: Declarative configurations that manage the rollout and scaling of application Pods across the nodes of a cluster.

- Kubectl: kubectl is a command-line interface (CLI) tool used to interact with Kubernetes clusters. It is the primary means through which administrators and developers manage and control Kubernetes resources.

- Pods: The smallest and most basic deployable units in Kubernetes. A Pod wraps one or more containers that share networking and storage, and represents a single running instance of an application.

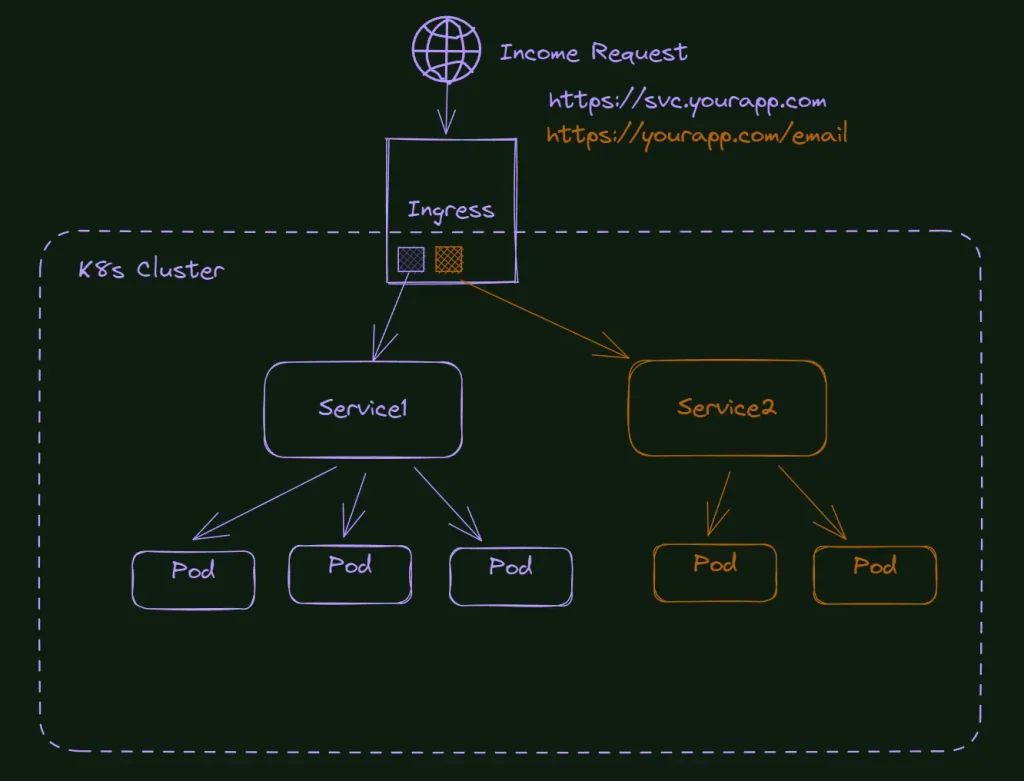

- Services: Abstracted network endpoints that enable communication between different parts of an application, both within and outside the cluster.
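To see how Deployments, Pods, and Services fit together, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints them as JSON, which kubectl accepts just like YAML. The name `hello-web`, the labels, and the `nginx:1.25` image are illustrative assumptions. The key idea is that the Service finds the Deployment's Pods through matching labels.

```python
import json

# Hypothetical app labels: the Deployment stamps them on its Pods,
# and the Service uses the same labels to find those Pods.
APP_LABELS = {"app": "hello-web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "hello-web"},
    "spec": {
        "replicas": 3,  # keep three Pod replicas running
        "selector": {"matchLabels": APP_LABELS},
        "template": {  # Pod template: every replica runs one container
            "metadata": {"labels": APP_LABELS},
            "spec": {
                "containers": [{
                    "name": "web",
                    "image": "nginx:1.25",  # any OCI image, e.g. one built with Docker
                    "ports": [{"containerPort": 80}],
                }]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "hello-web"},
    "spec": {
        "selector": APP_LABELS,  # send traffic to Pods carrying these labels
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

# A v1 List lets kubectl apply both objects from a single document.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```

Saved as `manifests.py`, the output could be applied with `python manifests.py | kubectl apply -f -`. In practice most people write the same structure directly in YAML; the Python form simply makes the label relationship explicit.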

You should not start working with Kubernetes without knowing these five subjects. This applies to developers too: more and more companies delegate Dockerfiles to developers, and to make sure your setup works, you need to understand these subjects.

If you don't know them yet, don't worry: this blog has material on all of them. You can start with Why is Kubernetes so difficult? And how to learn it fast.

The good news is that with this knowledge, you already qualify for some positions requiring K8s, especially developer positions. Ideally you should also learn the second layer, but lacking it will not keep you from starting in those positions.

The Second Layer

The second layer is aimed at professionals working in DevOps who plan to advance in that career. If you are a network engineer doing server maintenance and want to build your cloud or Kubernetes skills, the second layer is also your baseline.

Secrets

Secrets are Kubernetes resources that hold sensitive information, such as API keys, passwords, and TLS certificates. They let applications access confidential data without embedding it directly in the container image or the Pod definition. By default, Secret data is stored unencrypted in etcd unless the cluster administrator enables encryption at rest. Secrets can be created manually or generated automatically by controllers running in the cluster.

- Creating Secrets: Secrets can be created with the kubectl command-line tool or defined in a YAML manifest file. Values in a manifest's data field are base64-encoded. Base64 is an encoding, not encryption: anyone who can read the Secret can decode it, so the actual protection comes from RBAC, TLS connections to the API server, and optional encryption at rest.

- Consuming Secrets: Applications can access secrets through environment variables or as files mounted in the container's file system. The kubelet retrieves the Secret from the API server and exposes the decoded values to the container.
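The base64 point is easy to demonstrate. This minimal sketch builds a Secret manifest with hypothetical credentials and then decodes it again without any key, which is exactly why base64 should not be mistaken for protection. The Secret name `db-credentials` and its values are illustrative assumptions.

```python
import base64
import json

# Hypothetical credentials, for illustration only.
plain = {"username": "app", "password": "s3cr3t"}

# Values under "data" must be base64-encoded strings.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {k: base64.b64encode(v.encode()).decode() for k, v in plain.items()},
}

def decode_secret(obj):
    """Reverse the encoding: anyone who can read the Secret object can do this."""
    return {k: base64.b64decode(v).decode() for k, v in obj["data"].items()}

if __name__ == "__main__":
    print(json.dumps(secret, indent=2))
    print(decode_secret(secret))  # the original values, no key required
```

In day-to-day work, `kubectl create secret generic db-credentials --from-literal=username=app` performs the encoding for you, and a manifest can use the stringData field to supply plain-text values that the API server encodes on write.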

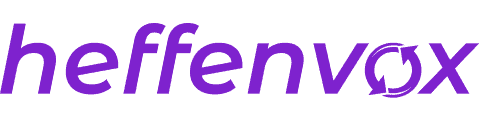

Ingress

Ingress is a Kubernetes resource that defines how external HTTP and HTTPS traffic enters the cluster. The rules themselves are enforced by an Ingress controller, such as ingress-nginx, which acts as the reverse proxy. Together they expose services externally, allowing external clients to reach the services running inside the cluster. You can see a more detailed explanation in the article What is Kubernetes Ingress, and How it works?

- Routing and Load Balancing: Ingress routes incoming requests to the appropriate service based on specified rules and host or path-based routing. It can also distribute the traffic among multiple service instances using load-balancing algorithms.

- TLS Termination: Ingress supports terminating TLS (Transport Layer Security) encryption, allowing secure communication between clients and the cluster. TLS certificates can be provided through secrets or managed automatically by add-ons such as cert-manager.
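Host and path-based routing can be sketched in a few lines. The simplified model below follows the Ingress path-matching idea: Prefix paths match whole path segments (so /api matches /api/orders but not /apiv2), Exact paths must match exactly, the longest matching path wins, and Exact wins a tie. The host, paths, and backend names are hypothetical, and wildcard hosts and default backends are left out; a real controller handles much more.

```python
def _segments(path):
    # "/api/v1/" -> ["api", "v1"]; empty segments are ignored
    return [part for part in path.split("/") if part]

def path_matches(rule_path, path_type, request_path):
    if path_type == "Exact":
        return request_path == rule_path
    # Prefix matching compares whole segments: /api matches /api/orders, not /apiv2
    prefix = _segments(rule_path)
    return _segments(request_path)[: len(prefix)] == prefix

def route(rules, host, request_path):
    """Return the backend for a request, or None if no rule matches."""
    best, best_key = None, None
    for rule in rules:
        if rule["host"] != host:
            continue
        for p in rule["paths"]:
            if path_matches(p["path"], p["pathType"], request_path):
                # Longest path wins; on equal length, Exact beats Prefix.
                key = (len(p["path"]), p["pathType"] == "Exact")
                if best_key is None or key > best_key:
                    best, best_key = p["backend"], key
    return best

# Hypothetical rules for a single host.
RULES = [
    {"host": "shop.example.com", "paths": [
        {"path": "/", "pathType": "Prefix", "backend": "frontend"},
        {"path": "/api", "pathType": "Prefix", "backend": "api"},
        {"path": "/api/health", "pathType": "Exact", "backend": "health"},
    ]},
]

if __name__ == "__main__":
    for path in ["/", "/api/orders", "/apiv2", "/api/health"]:
        print(path, "->", route(RULES, "shop.example.com", path))
```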

Certificates

In a Kubernetes environment, certificates are essential for securing communication between components, ensuring authenticity, and enabling encrypted connections. I wrote an in-depth, practical article on the subject: Managing SSL in your K8s Homelab: Professional Grade.

- Self-Signed Certificates: Cluster tooling and add-ons can generate self-signed certificates automatically (kubeadm, for example, creates its own cluster CA), which is suitable for development and testing. However, clients do not trust self-signed certificates by default, so they are a poor fit for production endpoints exposed to users.

- External Certificate Authorities (CA): In production, it’s common to use certificates signed by trusted external CAs. These certificates are used for securing ingress traffic, inter-cluster communication, and other secure connections within the cluster.

- Certificate Management: The Kubernetes ecosystem offers tools like cert-manager, an add-on installed in the cluster, which automate certificate management, including issuance, renewal, and rotation.
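Automated renewal boils down to a simple rule: renew well before the certificate expires. This minimal sketch assumes a hypothetical policy of renewing once two-thirds of the validity period has elapsed; cert-manager lets you tune the equivalent timing per certificate through the renewBefore field of its Certificate resource.

```python
from datetime import datetime, timedelta, timezone

def renewal_time(not_before, not_after, fraction=2 / 3):
    """Moment after which the certificate should be renewed (hypothetical 2/3 policy)."""
    return not_before + (not_after - not_before) * fraction

def needs_renewal(not_before, not_after, now=None, fraction=2 / 3):
    """True once the renewal point has passed; `now` defaults to the current UTC time."""
    now = now or datetime.now(timezone.utc)
    return now >= renewal_time(not_before, not_after, fraction)

if __name__ == "__main__":
    issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
    expires = issued + timedelta(days=90)  # a 90-day certificate
    print("renew after:", renewal_time(issued, expires))
```

Renewing early leaves a margin to retry if the certificate authority is temporarily unreachable, which is the main reason automated tools do not wait until the last day.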

Volumes

Volumes in Kubernetes provide a way to store and persist data beyond the lifetime of a container. They decouple data storage from the container's lifecycle, allowing data to be preserved even if a container is restarted or rescheduled.

- Persistent Volumes (PV): PVs are cluster-level resources representing a piece of storage in the cluster, often network-attached. They are either provisioned statically by a cluster administrator or dynamically through a StorageClass.

- Persistent Volume Claims (PVC): PVCs are requests made by users or applications for storage resources from PVs. They allow users to claim and use available storage without worrying about its underlying details.

- Volume Types: Kubernetes supports various types of volumes, including hostPath, emptyDir, NFS, AWS EBS, Azure Disk, and more. Each volume type provides different capabilities and functionalities, depending on the storage infrastructure used.
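The relationship between claims and volumes can be modeled as a matching problem. This sketch roughly mirrors how a claim is bound to a statically provisioned PV: the volume must be available, share the claim's storage class, support the requested access modes, and be large enough, and the smallest such volume is chosen. The flat dictionaries are a simplified, hypothetical representation, not the real API object shape, and only the Mi/Gi/Ti suffixes are parsed.

```python
UNITS = {"Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

def to_bytes(quantity):
    """Parse binary-suffix quantities like '10Gi' (other suffixes omitted for brevity)."""
    return int(quantity[:-2]) * UNITS[quantity[-2:]]

def find_volume(claim, volumes):
    """Return the name of the smallest available PV that satisfies the claim, or None."""
    wanted = to_bytes(claim["storage"])
    candidates = [
        pv for pv in volumes
        if pv["phase"] == "Available"
        and pv["storageClassName"] == claim["storageClassName"]
        and set(claim["accessModes"]) <= set(pv["accessModes"])
        and to_bytes(pv["capacity"]) >= wanted
    ]
    if not candidates:
        return None  # with dynamic provisioning, a new PV would be created instead
    return min(candidates, key=lambda pv: to_bytes(pv["capacity"]))["name"]

# Hypothetical inventory of statically provisioned volumes.
VOLUMES = [
    {"name": "pv-small", "capacity": "5Gi", "accessModes": ["ReadWriteOnce"],
     "storageClassName": "standard", "phase": "Available"},
    {"name": "pv-medium", "capacity": "20Gi", "accessModes": ["ReadWriteOnce"],
     "storageClassName": "standard", "phase": "Available"},
    {"name": "pv-large", "capacity": "100Gi", "accessModes": ["ReadWriteMany"],
     "storageClassName": "standard", "phase": "Available"},
]

if __name__ == "__main__":
    claim = {"storage": "10Gi", "accessModes": ["ReadWriteOnce"], "storageClassName": "standard"}
    print(find_volume(claim, VOLUMES))
```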

If you are interested in exploring the use of NFS in Kubernetes, I wrote about it here: How to use NFS in Kubernetes Cluster: Step By Step.

By using secrets, ingress, certificates, and volumes, Kubernetes enables secure communication, data persistence, and efficient routing of external traffic into the cluster. These components are vital for building resilient, secure, and scalable applications in a Kubernetes environment.

Going deeper

Beyond these first two layers, the knowledge becomes more specialized. Some companies could ask you to research and implement a GitOps strategy in Kubernetes or to use Helm charts extensively in daily work. You only need to go down to this third level if you face these specific demands or want to administer your own cluster. Here is a quick overview of these topics:

Helm

Helm is a Kubernetes package manager that simplifies application deployment and management. It lets users define application resources and their dependencies as charts, which are versioned packages. A Helm chart consists of templates, a default values file, and optional template helpers, so users can parameterize and customize deployments. Helm streamlines the installation, upgrading, and removal of complex applications, making it easier to manage applications as they evolve.
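Much of Helm's parameterization comes from layering your values on top of the chart's defaults before the templates are rendered. The sketch below models that layering as a recursive merge, assuming Helm's general behavior: nested maps are merged key by key, while scalars and lists are replaced wholesale. The value names are hypothetical, and Go template rendering itself is not modeled.

```python
import copy

def merge_values(defaults, overrides):
    """Recursively overlay user-supplied values onto chart defaults."""
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)  # merge nested maps
        else:
            result[key] = copy.deepcopy(value)  # scalars and lists replace the default
    return result

# Hypothetical chart defaults (values.yaml) and environment-specific overrides.
defaults = {"replicaCount": 1, "image": {"repository": "nginx", "tag": "1.25"}, "ports": [80]}
prod = {"image": {"tag": "1.27"}, "ports": [8080]}

if __name__ == "__main__":
    print(merge_values(defaults, prod))
```

On the command line, the same layering happens with something like `helm install my-release ./chart -f prod-values.yaml`, where later values files and `--set` flags take precedence over earlier ones.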

Cluster Autoscaling

Cluster autoscaling dynamically adjusts the number of worker nodes in a cluster based on workload demands. It is not part of the core control plane; it runs as an add-on such as the Kubernetes Cluster Autoscaler or Karpenter, usually integrated with a cloud provider. The autoscaler adds nodes when Pods stay pending because no node has enough free resources for their requests, and removes underutilized nodes whose Pods can be rescheduled elsewhere. This keeps resource utilization efficient while maintaining availability and performance for applications.
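A scale-up decision is essentially a bin-packing question: how many new nodes would the pending Pods need? This sketch estimates it with first-fit-decreasing packing over CPU (millicores) and memory (MiB) requests. It is a deliberate simplification; the real Cluster Autoscaler simulates the scheduler and also considers affinity, taints, DaemonSets, and node groups.

```python
def nodes_needed(pending, node_cpu_m, node_mem_mi):
    """Estimate new nodes required for pending Pods given as (cpu_m, mem_mi) requests."""
    nodes = []  # remaining free capacity of each hypothetical new node: [cpu_m, mem_mi]
    for cpu, mem in sorted(pending, reverse=True):  # place the biggest Pods first
        if cpu > node_cpu_m or mem > node_mem_mi:
            raise ValueError("Pod does not fit on an empty node of this type")
        for free in nodes:
            if free[0] >= cpu and free[1] >= mem:
                free[0] -= cpu
                free[1] -= mem
                break
        else:  # no existing new node has room: add another one
            nodes.append([node_cpu_m - cpu, node_mem_mi - mem])
    return len(nodes)

if __name__ == "__main__":
    # Five Pods requesting 1.5 CPU and 2 GiB each, on 4-CPU / 16 GiB nodes.
    print(nodes_needed([(1500, 2048)] * 5, 4000, 16384))
```

Dividing the total request (7.5 CPUs) by node size would suggest two nodes, but only two of these Pods fit on each 4-CPU node, so three are required. That gap is why autoscalers reason about Pod placement rather than raw totals.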

ConfigMap

ConfigMap is a Kubernetes resource used to store configuration data in key-value pairs. It decouples application configuration from container images, making configuration easier to manage. ConfigMaps can be mounted as volumes or exposed as environment variables within containers, allowing applications to read configuration data at runtime. They commonly store environment-specific settings, such as database hostnames, API endpoints, and feature toggles; credentials belong in Secrets instead.
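From the application's side, a mounted ConfigMap appears as a directory with one file per key. This minimal sketch reads selected keys from such a directory and lets environment variables override them. The mount path, key names, and the override precedence are assumptions chosen for illustration, not a Kubernetes convention.

```python
import os
from pathlib import Path

def load_config(mount_dir, keys, env=os.environ):
    """Read settings from a mounted ConfigMap directory, letting env vars override."""
    config = {}
    base = Path(mount_dir)
    for key in keys:
        file = base / key  # each ConfigMap key appears as a file with the same name
        if file.is_file():
            config[key] = file.read_text().strip()
        env_name = key.upper().replace(".", "_").replace("-", "_")  # log-level -> LOG_LEVEL
        if env_name in env:
            config[key] = env[env_name]
    return config

if __name__ == "__main__":
    # Hypothetical mount path, e.g. a volumeMount at /etc/app-config.
    print(load_config("/etc/app-config", ["api-endpoint", "log-level"]))
```

One practical difference between the two options: files in a mounted ConfigMap volume are eventually refreshed when the ConfigMap changes (unless mounted with subPath), while environment variables are fixed when the container starts.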

Custom Resource Definitions (CRD)

Custom Resource Definitions (CRDs) enable users to define their own resource types and extend the Kubernetes API. CRDs allow the creation of custom resources that are treated like built-in Kubernetes resources. This extension mechanism lets users model and manage complex applications and services within Kubernetes using familiar Kubernetes concepts and tooling.
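Here is what such an extension looks like in practice: a minimal CustomResourceDefinition for a hypothetical `Backup` type in a hypothetical `example.com` API group, plus one instance of the new type. The schema fields (`schedule`, `retentionDays`) are illustrative assumptions; the manifest structure follows the apiextensions.k8s.io/v1 API.

```python
import json

GROUP = "example.com"  # hypothetical API group
PLURAL = "backups"

crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    "metadata": {"name": f"{PLURAL}.{GROUP}"},  # must be <plural>.<group>
    "spec": {
        "group": GROUP,
        "scope": "Namespaced",
        "names": {"kind": "Backup", "plural": PLURAL, "singular": "backup"},
        "versions": [{
            "name": "v1alpha1",
            "served": True,
            "storage": True,  # exactly one version is stored in etcd
            "schema": {"openAPIV3Schema": {
                "type": "object",
                "properties": {"spec": {
                    "type": "object",
                    "properties": {
                        "schedule": {"type": "string"},
                        "retentionDays": {"type": "integer", "minimum": 1},
                    },
                    "required": ["schedule"],
                }},
            }},
        }],
    },
}

# An instance of the new type; once the CRD is established, kubectl handles it
# like a built-in resource (kubectl get backups, kubectl describe backup nightly).
backup = {
    "apiVersion": f"{GROUP}/v1alpha1",
    "kind": "Backup",
    "metadata": {"name": "nightly"},
    "spec": {"schedule": "0 2 * * *", "retentionDays": 7},
}

if __name__ == "__main__":
    print(json.dumps(crd, indent=2))
```

On its own, a CRD only teaches the API server to store and validate the new objects; acting on them is the job of a controller, which is where operators come in.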

Role-Based Access Control (RBAC)

Role-Based Access Control (RBAC) is a security mechanism in Kubernetes that defines and manages access permissions within the cluster. RBAC lets cluster administrators grant fine-grained permissions to users, groups, or service accounts, ensuring that only authorized entities can perform specific actions on resources. RBAC enables the segregation of duties, improves security, and controls who can create, modify, or delete resources within the cluster.
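RBAC rules are easiest to understand by evaluating them. The sketch below defines a Role with a hypothetical name and namespace, then checks requests against it. RBAC is purely additive: there are no deny rules, so a request is allowed if any rule in any bound role grants it. The evaluator is a simplification that ignores bindings, resourceNames, and non-resource URLs.

```python
# A Role as it would appear in a manifest (hypothetical name and namespace).
pod_reader = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "dev"},
    "rules": [
        # "" is the core API group, where Pods live.
        {"apiGroups": [""], "resources": ["pods", "pods/log"], "verbs": ["get", "list", "watch"]},
    ],
}

def _matches(allowed, value):
    return "*" in allowed or value in allowed

def is_allowed(roles, api_group, resource, verb):
    """Allowed if any rule in any role grants the (group, resource, verb) combination."""
    return any(
        _matches(rule["apiGroups"], api_group)
        and _matches(rule["resources"], resource)
        and _matches(rule["verbs"], verb)
        for role in roles
        for rule in role["rules"]
    )

if __name__ == "__main__":
    print(is_allowed([pod_reader], "", "pods", "list"))
    print(is_allowed([pod_reader], "", "pods", "delete"))
```

Against a real cluster, `kubectl auth can-i list pods --namespace dev --as some-user` asks the API server the same question.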

GitOps

GitOps is an operational framework that uses Git as the single source of truth for defining and managing infrastructure and application configurations. In the GitOps approach, Kubernetes manifests and other configuration files are version-controlled in a Git repository. An agent running in the cluster, such as Argo CD or Flux, continuously compares the cluster's live state with the desired state described in the repository and reconciles any difference, so merging a configuration change is what triggers a deployment. This makes infrastructure and application management declarative and auditable, achieving consistency and reproducibility in Kubernetes deployments.
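At the heart of every GitOps agent is a diff between desired and live state. This sketch computes the actions needed to reconcile them, with optional pruning of resources that exist in the cluster but are no longer in Git. Resource keys such as `Deployment/web` and the flat spec dictionaries are simplifying assumptions; real tools compare full objects while ignoring server-populated fields.

```python
def plan(desired, live, prune=True):
    """List the actions that bring the live state in line with the desired state.

    Both arguments map a resource key such as "Deployment/web" to its spec.
    """
    actions = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif desired[key] != live[key]:
            actions.append(("update", key))  # drift or a new commit changed the spec
    if prune:
        for key in sorted(set(live) - set(desired)):
            actions.append(("delete", key))  # removed from Git, so remove from the cluster
    return actions

if __name__ == "__main__":
    desired = {"Deployment/web": {"replicas": 3}, "Service/web": {"port": 80}}
    live = {"Deployment/web": {"replicas": 2}, "ConfigMap/old": {}}
    print(plan(desired, live))
```

An agent runs this comparison in a loop, applying the resulting actions each time, so manual changes made directly in the cluster are reverted to what Git declares.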

By utilizing Helm, cluster autoscaling, ConfigMap, CRD, RBAC, and GitOps practices, Kubernetes users can enhance application deployment, configuration management, security, and infrastructure automation. These tools and concepts contribute to streamlined operations, improved scalability, and increased agility in Kubernetes environments.

Advanced topics

The last layer in my priority list is for those with specific requirements or tasks at their company, or those pursuing certification.

Network Policies

Network policies are Kubernetes resources that let users define and enforce rules for traffic to and from Pods. They give administrators fine-grained control over ingress and egress traffic, selecting allowed peers by Pod labels, namespace labels, or IP address blocks (CIDRs), combined with ports and protocols. Once any policy selects a Pod for a given direction, only traffic that some policy explicitly allows is permitted. Policies are enforced only if the cluster's network plugin supports them, as Calico and Cilium do. By leveraging network policies, administrators can isolate and secure applications within the cluster, providing enhanced network-level security.
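The allow-list semantics can be sketched as a small evaluator for ingress traffic. It assumes a simplified policy shape: podSelector is a flat label map (standing in for matchLabels), and only ipBlock peers with optional except ranges are supported. The labels, CIDRs, and port are hypothetical. An empty podSelector selects every Pod, as in Kubernetes.

```python
import ipaddress

def _labels_match(selector, labels):
    # Simplified matchLabels: every selector label must be present with the same value.
    return all(labels.get(k) == v for k, v in selector.items())

def _peer_allows(peer, src_ip):
    block = peer["ipBlock"]
    ip = ipaddress.ip_address(src_ip)
    if ip not in ipaddress.ip_network(block["cidr"]):
        return False
    return not any(ip in ipaddress.ip_network(ex) for ex in block.get("except", []))

def ingress_allowed(policies, pod_labels, src_ip, port, protocol="TCP"):
    selecting = [p for p in policies if _labels_match(p["podSelector"], pod_labels)]
    if not selecting:
        return True  # no policy selects the Pod: all ingress is allowed
    for policy in selecting:
        for rule in policy.get("ingress", []):
            peers_ok = not rule.get("from") or any(_peer_allows(pe, src_ip) for pe in rule["from"])
            ports_ok = not rule.get("ports") or any(
                pr["port"] == port and pr.get("protocol", "TCP") == protocol for pr in rule["ports"]
            )
            if peers_ok and ports_ok:
                return True
    return False  # selected but not explicitly allowed: denied

# Hypothetical policy: database Pods accept PostgreSQL traffic from 10.0.0.0/16,
# except from the 10.0.5.0/24 range.
DB_POLICY = {
    "podSelector": {"app": "db"},
    "ingress": [{
        "from": [{"ipBlock": {"cidr": "10.0.0.0/16", "except": ["10.0.5.0/24"]}}],
        "ports": [{"port": 5432, "protocol": "TCP"}],
    }],
}

if __name__ == "__main__":
    print(ingress_allowed([DB_POLICY], {"app": "db"}, "10.0.1.7", 5432))
```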

Service Mesh

A service mesh is an infrastructure layer that provides advanced networking, observability, and security features for microservices running in Kubernetes clusters. It typically uses the sidecar proxy pattern, where a dedicated proxy, such as Envoy (used by Istio) or Linkerd's linkerd2-proxy, is deployed alongside each microservice. The mesh handles service-to-service communication, traffic routing, and load balancing, and implements features like circuit breaking, retries, and distributed tracing, which simplifies service communication and improves resilience and observability.
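Circuit breaking is one of the behaviors a mesh proxy applies on your behalf. The sketch below shows the idea in application code: after a number of consecutive failures the circuit opens and calls fail fast, and after a cooldown one trial request is let through. This is a generic illustration of the pattern, not how Envoy implements it; in a mesh you would configure the equivalent declaratively (Istio, for example, uses outlier detection settings in a DestinationRule). The thresholds are arbitrary assumptions.

```python
import time

class CircuitBreaker:
    """After `threshold` consecutive failures the circuit opens and calls fail fast
    until `reset_after` seconds have passed; then one trial call is allowed."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock  # injectable clock makes the behavior testable
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: failing fast")
            self.opened_at = None  # half-open: let one trial request through
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # trip (or re-trip after a failed trial)
            raise
        self.failures = 0  # any success closes the circuit
        return result
```

Failing fast protects a struggling upstream service from a flood of requests it cannot serve, giving it time to recover instead of cascading the failure through the call chain.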

Operators

Operators are Kubernetes controllers that extend the platform’s functionality by automating complex application management tasks. Operators encapsulate domain-specific knowledge and best practices for deploying, configuring, and managing applications on Kubernetes. They leverage Custom Resource Definitions (CRDs) to define and manage custom resources encapsulating application-specific logic. Operators automate tasks like application provisioning, scaling, upgrades, and ongoing lifecycle management, reducing operational complexities and improving the overall reliability of applications running on Kubernetes.
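The "domain-specific knowledge" of an operator lives in its reconcile function. This sketch models a hypothetical `Database` custom resource: each call inspects the current state and returns one next step, encoding two illustrative operational rules, namely taking a backup before any version upgrade and scaling one member at a time. The `simulate` function is a stand-in for the real API calls an operator would make.

```python
def reconcile(spec, status):
    """Return the next step toward the desired spec, or None when converged."""
    if status["version"] != spec["version"]:
        if not status["backup_ready"]:
            return "backup"  # domain rule: never upgrade without a fresh backup
        return "upgrade"
    if status["replicas"] < spec["replicas"]:
        return "scale_up"  # domain rule: add one member at a time
    if status["replicas"] > spec["replicas"]:
        return "scale_down"
    return None

def simulate(step, spec, status):
    """Stand-in for real actions: return the status after performing `step`."""
    new = dict(status)
    if step == "backup":
        new["backup_ready"] = True
    elif step == "upgrade":
        new["version"] = spec["version"]
        new["backup_ready"] = False
    elif step == "scale_up":
        new["replicas"] += 1
    elif step == "scale_down":
        new["replicas"] -= 1
    return new

def run_until_converged(spec, status, max_steps=20):
    steps = []
    while (step := reconcile(spec, status)) is not None and len(steps) < max_steps:
        steps.append(step)
        status = simulate(step, spec, status)
    return steps, status

if __name__ == "__main__":
    spec = {"version": "16", "replicas": 3}
    status = {"version": "15", "replicas": 1, "backup_ready": False}
    print(run_until_converged(spec, status))
```

Returning a single step per call mirrors the level-triggered style of Kubernetes controllers: the operator does not remember a plan, it re-reads the state on every event and decides again, which makes it robust to crashes and restarts.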

Node Hardening

Node hardening means implementing security measures to protect the worker nodes within a Kubernetes cluster. This involves applying security configurations, such as disabling unnecessary services, minimizing attack surfaces, enabling firewalls, enforcing access controls, and regularly patching the operating system and container runtime. Node hardening practices enhance the security posture of the cluster by reducing the risk of unauthorized access, data breaches, and other security threats.

Bleeding Edge

The term “bleeding edge” refers to adopting the latest cutting-edge technologies and software versions. In the context of Kubernetes, bleeding edge typically implies using the latest releases, features, and enhancements. While adopting bleeding-edge technologies can offer access to the newest functionalities and innovations, it also carries potential risks, such as instability, compatibility issues, and limited community support.

Organizations considering the bleeding edge should carefully evaluate the benefits and potential drawbacks before adopting such technologies in production environments.

Image Scanning

Image scanning is a security practice that involves analyzing container images for known vulnerabilities and potential risks before deployment. Scanning tools, such as Trivy or Grype, inventory the operating system packages and application dependencies inside an image and compare their versions against vulnerability databases. By detecting and flagging vulnerable components, image scanning enables organizations to proactively address security concerns and mitigate the risks of running containers with outdated or insecure dependencies.
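Conceptually, a scanner matches installed package versions against advisories that list the first fixed version. This sketch shows that core comparison with a hypothetical advisory table; the advisory IDs are placeholders rather than real CVEs, and the plain dotted-number version parsing is a simplification, since real scanners handle distribution-specific version schemes.

```python
def parse_version(v):
    """Turn '3.0.11' into (3, 0, 11) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in v.split("."))

# Hypothetical advisory data: package -> list of (advisory id, first fixed version).
ADVISORIES = {
    "openssl": [("EXAMPLE-ADV-0001", "3.0.13")],
    "zlib": [("EXAMPLE-ADV-0002", "1.3.1")],
}

def scan(packages, advisories=ADVISORIES):
    """Return (package, installed, advisory, fixed_in) for every unfixed advisory."""
    findings = []
    for name, installed in sorted(packages.items()):
        for adv_id, fixed_in in advisories.get(name, []):
            if parse_version(installed) < parse_version(fixed_in):
                findings.append((name, installed, adv_id, fixed_in))
    return findings

if __name__ == "__main__":
    # Hypothetical package inventory extracted from an image.
    image_packages = {"openssl": "3.0.11", "zlib": "1.3.1", "curl": "8.5.0"}
    for finding in scan(image_packages):
        print(finding)
```

In a pipeline, a real scanner such as Trivy (`trivy image <image>`) performs this lookup against maintained databases, and a non-empty result can be used to fail the build before the image reaches the cluster.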

By leveraging network policies, service mesh, operators, node hardening, bleeding edge technologies, and image scanning practices, organizations can enhance the security, reliability, and performance of their Kubernetes deployments. These practices create robust and secure containerized environments while ensuring the smooth operation of applications running on Kubernetes clusters.

Conclusion

This list is far from a definitive or complete list of Kubernetes resources. Still, I believe it is more than enough for anyone to make good use of Kubernetes and to work at most companies in today's market.

In my experience, most companies do not even touch subjects like node hardening or custom operators.

I hope this article has clarified this challenging learning path. Remember: continuous learning, staying updated with the latest advancements, and considering your individual requirements and goals are essential to successfully navigating the dynamic landscape of Kubernetes.