Welcome back! We’re thrilled to continue our exploration of cost management in this next article. If you’ve been following along, we’ve already covered some crucial errors to avoid and valuable insights on optimizing cloud costs. Now, let’s dive into the remaining key considerations for effective cost management.

This article picks up where we left off, starting with Item 7: “Introducing harmful risks to the organization.” We’ll discuss the potential risks and their impact on your organization’s value proposition, including cybersecurity, supply chain, and talent management. Understanding these risks and implementing proactive measures will be instrumental in ensuring long-term success.

Beyond that, we’ll turn to Item 8, which explains how to tell healthy cloud cost growth from unhealthy growth, and Item 9, which covers the simple fixes that release unused capacity and the cloud elasticity that keeps you from paying for capacity you don’t use.

Lastly, we’ll conclude our discussion with Item 10, which examines the misconception that slowing down cloud migrations saves money.

So, join us as we continue our journey through the intricacies of cost management. We’re excited to share more insights, strategies, and real-world examples that will help you become a master of cost optimization in your cloud environment.

Stay tuned for the upcoming sections, and thank you for being part of this learning experience. Let’s dive in and uncover more valuable insights together!

Seventh: Introducing harmful risks to the organization

Imagine a ship captain who, to save on fuel costs, decides to sail through treacherous waters known for their unpredictable storms and hidden obstacles. While this may provide short-term savings, it puts the ship, crew, and cargo at significant risk. Similarly, cost-cutting actions that disregard potential risks can jeopardize the organization’s value proposition and long-term success.

This error highlights the importance of considering the risks associated with cost-cutting actions. While reducing costs is important, it is essential to evaluate whether these actions introduce or increase risks that could negatively impact the organization’s value proposition. Cybersecurity, supply chain, and talent risks can have far-reaching consequences that outweigh the short-term cost savings.

Under budget pressure, executives typically look first to lower costs in their immediate area of responsibility, such as their function. It is just as critical to consider whether those cost-reduction actions would create or exacerbate risks that threaten the organization’s value proposition:

- Cybersecurity: Neglecting investment in cybersecurity may lower IT costs in the short term, but it significantly increases the risk of major cybersecurity incidents such as ransomware attacks or headline-making data breaches. These incidents can severely affect shareholders, customers, and partners, leading to reputational damage and financial losses. Managing the aftermath of a cybersecurity incident itself incurs substantial costs.

- Supply chain: Reducing inventory levels across the entire product portfolio may temporarily boost the organization’s cash position. However, it can negatively impact supply chain performance, jeopardizing customer service levels, especially for products that generate significant value for the organization. The erosion of supply chain efficiency can lead to delayed deliveries, stockouts, and dissatisfied customers.

- Talent: Implementing cost-cutting initiatives and reducing investments in talent can harm the employee experience, which is crucial for fostering employee engagement and productivity. Failing to recognize the impact of such decisions can result in long-term damage to key talent outcomes. This is particularly critical in today’s competitive landscape, where highly skilled talent is scarce, and hiring and retaining top talent can be costly.

By understanding the potential risks associated with underinvesting in cybersecurity, reducing inventory levels, and cutting back on talent investments, organizations can make informed decisions that balance short-term cost savings with the business’s long-term value, security, and sustainability.

In Google Cloud, organizations should prioritize cybersecurity and invest in robust security measures, such as implementing multi-factor authentication, data encryption, and regular security audits. While it may be tempting to reduce cybersecurity investments to cut costs, the potential risk of a major cybersecurity incident, like a data breach, far outweighs the short-term cost savings.

In AWS, organizations can avoid introducing harmful risks by maintaining appropriate inventory levels across the supply chain. While reducing inventory levels may provide short-term cost savings, it can compromise supply chain performance and customer service levels, particularly for items that generate significant value for the organization. Implementing supply chain optimization tools like Amazon Forecast can help balance cost reduction and supply chain effectiveness.

In Azure, organizations should prioritize employee experience and avoid rash decisions that damage key talent outcomes. While cost-cutting initiatives may seem necessary, it is crucial to understand the impact on employee engagement and productivity. By investing in employee development programs, creating a positive work environment, and fostering a culture of innovation, organizations can retain top talent and mitigate long-term risks associated with talent shortages.

Organizations make better-informed decisions when they weigh potential risks and their impact before implementing cost-cutting measures. Assessing risks in areas such as cybersecurity, supply chain, and talent allows organizations to balance cost optimization with preserving the organization’s value proposition. This ensures that short-term cost savings do not come at the expense of the organization’s long-term success and sustainability.

How to avoid it?

To avoid introducing harmful risks to the organization while implementing cost-cutting measures, you can follow these steps:

- Conduct a comprehensive risk assessment: Before implementing cost-cutting actions, conduct a thorough risk assessment to identify potential risks associated with each area of cost reduction. Consider cybersecurity, supply chain, talent, compliance, and operational resilience. Evaluate the potential impact and likelihood of these risks materializing.

- Prioritize risk mitigation: Once risks are identified, prioritize their mitigation based on their potential impact on the organization’s value proposition. Allocate resources and efforts to address high-priority risks first, ensuring that risk mitigation measures are aligned with the organization’s overall strategy and objectives.

- Maintain cybersecurity measures: Do not compromise cybersecurity investments, even under budget pressures. Continuously invest in robust cybersecurity measures to protect against potential cyber threats and data breaches. Implement multi-factor authentication, encryption, regular security audits, and employee awareness programs to minimize the risk of cybersecurity incidents.

- Optimize supply chain performance: While reducing costs in the supply chain, carefully evaluate the impact on supply chain performance and customer service levels. Balance cost reductions with maintaining adequate inventory levels to meet customer demands and avoid disruptions. Implement supply chain optimization tools, advanced analytics, and demand forecasting to enhance efficiency without compromising service levels.

- Prioritize employee experience: Recognize that employee experience is critical for employee engagement and productivity. Avoid rash decisions that damage employee experience and long-term talent outcomes. Invest in employee development programs, create a positive work environment, and foster a culture that values innovation and collaboration.

- Establish risk-aware decision-making processes: Incorporate risk assessment and mitigation considerations into the decision-making processes related to cost-cutting initiatives. Ensure that key stakeholders, including the CFO, CIO, and risk management teams, evaluate the potential risks and make informed decisions.

- Continuously monitor and reassess risks: Regularly monitor and reassess the identified risks to stay proactive in mitigating potential threats. Keep up-to-date with emerging risks, industry trends, and regulatory changes that may impact the organization. Adapt risk mitigation strategies as needed to ensure ongoing protection and value preservation.

- Foster collaboration across functions: Encourage cross-functional collaboration between finance, IT, risk management, and other relevant departments. This collaboration enables a holistic approach to cost-cutting decisions, considering financial objectives and potential organizational risks.

- Engage with external experts: Seek advice and insights from external experts, consultants, or industry peers specializing in risk management and cost optimization. Their expertise can provide valuable perspectives and help identify potential risks that might be overlooked internally.

- Communicate and educate: Ensure clear communication and education about the importance of risk management and the potential risks associated with cost-cutting actions. Create awareness among stakeholders about the organization’s risk appetite and the need to balance cost reduction with risk mitigation for long-term success.

By following these steps, organizations can effectively manage risks associated with cost-cutting initiatives. This approach enables organizations to make informed decisions that balance cost optimization with risk mitigation, preserving the organization’s value proposition and long-term sustainability.
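The risk assessment and prioritization steps above can be sketched as a small risk register. This is a minimal illustration, not a prescribed framework: the `CostCutRisk` class, the 1 to 5 scales, the impact times likelihood score, and the review threshold of 12 are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostCutRisk:
    """One proposed cost-cutting action and the risk it may introduce."""
    action: str
    area: str           # e.g. "cybersecurity", "supply chain", "talent"
    impact: int         # 1 (minor) to 5 (severe); hypothetical scale
    likelihood: int     # 1 (rare) to 5 (almost certain); hypothetical scale

    @property
    def score(self) -> int:
        # Simple impact x likelihood score; real frameworks may weight differently.
        return self.impact * self.likelihood


def prioritize(risks, review_threshold=12):
    """Sort risks from highest to lowest score and flag those needing review.

    Returns a list of (risk, needs_review) tuples. The threshold is an
    assumed cut-off, not an industry standard.
    """
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(risk, risk.score >= review_threshold) for risk in ranked]


# Illustrative entries only; the scores are made up for this example.
register = [
    CostCutRisk("Defer security audits", "cybersecurity", impact=5, likelihood=3),
    CostCutRisk("Cut inventory across the whole portfolio", "supply chain", impact=4, likelihood=4),
    CostCutRisk("Freeze training budget", "talent", impact=3, likelihood=2),
]

for risk, needs_review in prioritize(register):
    status = "review before cutting" if needs_review else "acceptable"
    print(f"{risk.score:>2}  {risk.area:<13} {risk.action}: {status}")
```

Keeping the score this simple makes it easy for finance, IT, and risk teams to discuss the same list; the scales and threshold should be replaced with whatever your own risk framework defines.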

Eighth: Stop unhealthy growth

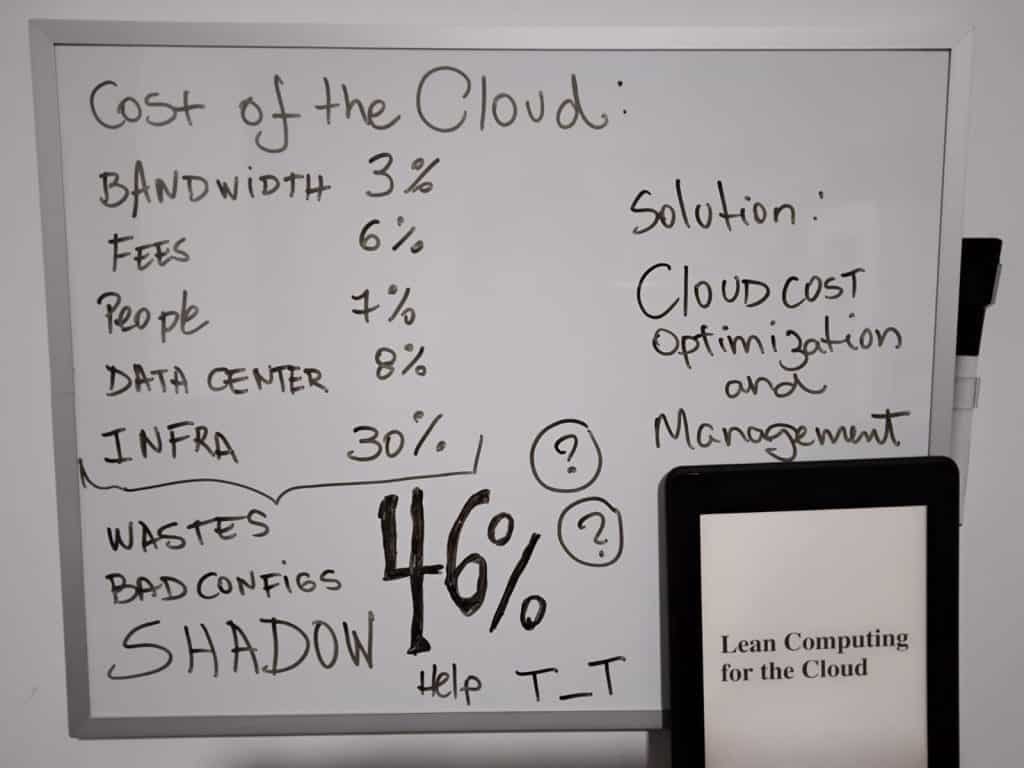

Imagine a garden where some plants thrive due to proper care, sunlight, and water, while others are overgrown, neglected, and hindering the garden’s overall health. Similarly, in cloud cost management, healthy growth represents optimized resource allocation and efficient consumption practices. In contrast, unhealthy growth stems from wasteful resource provisioning, neglecting to shut down unused instances, and a lack of financial controls.

This error emphasizes the importance of distinguishing between healthy and unhealthy cloud costs. While some cost increases are associated with positive factors like business growth, others stem from wasteful practices or poor governance. Companies should establish effective tagging and reporting capabilities to ensure transparency and optimize cloud spend, implement allocation models that promote accountability, and introduce financial controls to track and manage budgets.

Cloud cost increases can reflect healthy growth, such as growth in the user base, increased digital adoption, and the development of new digital capabilities. However, these cost increases can hide “unhealthy” growth resulting from poor resource management, inefficient provisioning, or immature consumption practices. This lack of clarity between healthy and unhealthy costs can be detrimental, especially during economic uncertainty when companies must adjust their cloud spend.

Companies can establish a consistent tagging and reporting capability in Google Cloud by leveraging Google Cloud Resource Manager and Cloud Billing APIs. By applying labels and tags to resources and using Cloud Billing reports alongside tools like Google Cloud Monitoring and Google Cloud Logging, organizations can gain a comprehensive view of their cloud spend and identify areas of unhealthy growth. They can also set up allocation models that allocate costs to specific business units or projects, promoting accountability and awareness of cloud costs.

In AWS, organizations can leverage AWS Cost Explorer and AWS Budgets to gain insights into their cloud spend and identify areas of unhealthy growth. Implementing tagging strategies using AWS Resource Groups and cost allocation tags enables detailed reporting and cost allocation to specific business units or teams. AWS Cost Anomaly Detection can help identify unusual spending patterns and potential areas for optimization.

In Azure, companies can utilize Azure Cost Management and Azure Monitor to gain visibility into their cloud spend. Organizations can enforce tagging standards and generate comprehensive reports by applying tags and using Azure Policy. Azure Cost Management budgets and alerts can be set up to monitor spending trends and identify areas of unhealthy growth. Azure Advisor provides recommendations for cost optimization and resource utilization.

By setting up a consistent tagging and reporting capability, allocating costs to business units, and implementing financial controls, companies can effectively manage their cloud costs. This ensures that cloud spend aligns with business priorities and critical use cases while promoting accountability and transparency. Regular monitoring, optimization, and awareness of cloud costs enable healthy growth and resource optimization within the cloud environment.
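To make the tagging and allocation idea concrete, here is a minimal sketch that allocates billing line items to business units by tag, measures how much spend is untagged, and checks whether cost per user is rising. The record layout, the `business_unit` tag key, and the 5 percent tolerance are assumptions for illustration; real billing exports from each provider use their own schemas.

```python
from collections import defaultdict


def allocate_by_tag(line_items, tag_key="business_unit"):
    """Sum cost per tag value; items missing the tag land in 'UNTAGGED'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "UNTAGGED")
        totals[owner] += item["cost"]
    return dict(totals)


def untagged_share(totals):
    """Fraction of total spend that nobody is accountable for."""
    total = sum(totals.values())
    return totals.get("UNTAGGED", 0.0) / total if total else 0.0


def unit_cost_is_rising(prev_cost, prev_units, cur_cost, cur_units, tolerance=0.05):
    """True when cost per unit (e.g. per active user) grew beyond the tolerance.

    Spend that grows in step with usage keeps unit cost flat (healthy growth);
    spend that outpaces usage pushes unit cost up (worth investigating).
    """
    prev_unit = prev_cost / prev_units
    cur_unit = cur_cost / cur_units
    return cur_unit > prev_unit * (1 + tolerance)


# Hypothetical billing records for one month.
items = [
    {"service": "compute", "cost": 1200.0, "tags": {"business_unit": "payments"}},
    {"service": "storage", "cost": 300.0, "tags": {"business_unit": "payments"}},
    {"service": "compute", "cost": 900.0, "tags": {"business_unit": "search"}},
    {"service": "compute", "cost": 600.0, "tags": {}},
]

totals = allocate_by_tag(items)
print(totals)
print(f"Untagged share: {untagged_share(totals):.0%}")
print("Unit cost rising:", unit_cost_is_rising(2500.0, 50_000, 3000.0, 50_000))
```

A high untagged share is itself a finding: until that spend has an owner, it cannot be classified as healthy or unhealthy.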

How to avoid it?

To avoid the risks associated with underinvesting in cybersecurity, reducing inventory levels, and cutting back on talent investments, you can follow these steps:

- Prioritize cybersecurity investments: Allocate adequate resources and budget to establish a robust cybersecurity program. Conduct regular risk assessments, implement security best practices, and leverage advanced security technologies to protect your organization’s data and systems. Stay updated on emerging threats and invest in employee training to promote a strong cybersecurity culture.

- Maintain optimal inventory levels: Conduct thorough demand forecasting and analysis to determine the appropriate inventory levels for your products. Strive for a balance between optimizing cash flow and ensuring timely fulfillment of customer orders. Implement inventory management systems and processes that enable efficient tracking, replenishment, and demand planning to prevent stockouts and minimize supply chain disruptions.

- Invest in talent development and retention: Recognize the value of your employees and invest in their growth and development. Provide training opportunities, mentorship programs, and competitive compensation packages to attract and retain top talent. Foster a positive work culture that encourages employee engagement, collaboration, and innovation. Regularly assess and address employee satisfaction and concerns to maintain a motivated and productive workforce.

- Establish risk management practices: Develop and implement risk management frameworks encompassing cybersecurity, supply chain, and talent management. Identify potential risks, assess their potential impact, and develop mitigation strategies. Regularly review and update risk management practices to address evolving threats and challenges.

- Foster cross-functional collaboration: Encourage collaboration between departments and stakeholders to ensure that decisions regarding cybersecurity, supply chain management, and talent investments are made with a holistic view of the organization. Promote open communication and knowledge-sharing to facilitate informed decision-making and avoid siloed approaches that overlook potential risks.

- Continuously monitor and assess: Regularly monitor and assess the effectiveness of your cybersecurity measures, inventory management processes, and talent development initiatives. Implement performance metrics, conduct regular audits, and analyze data to identify areas for improvement and make informed decisions. Stay updated on industry trends and best practices to adapt your strategies accordingly.

- Seek external expertise: To gain additional insights and expertise, consider engaging external consultants, cybersecurity firms, or supply chain experts. They can provide objective assessments, identify vulnerabilities, and recommend strengthening your cybersecurity, supply chain, and talent management practices.

By following these steps, you can mitigate the risks associated with underinvesting in cybersecurity, reducing inventory levels, and neglecting talent investments. Taking a proactive and holistic approach to these areas ensures your organization’s long-term resilience, security, and growth while balancing cost optimization and risk management.

Ninth: Focus on simple fixes

We consistently discover numerous cost and performance optimization opportunities when assisting organizations with their cloud programs. The encouraging news is that these improvements can be realized through relatively straightforward actions. The most common “no-regret” steps include releasing unused capacity, implementing scheduling and auto-scaling features, and aligning service levels with specific application requirements. For instance, organizations can switch from memory-optimized to standard instances or leverage a serverless compute engine for containers instead of managing their cluster. Companies must prioritize actions that offer maximum benefits and swiftly deploy them across teams and cloud users. Quick feedback loops are essential to evaluate the success of these measures; if a lever proves effective for one application or team, it can be scaled to others.

For example, a major public-sector agency achieved approximately 20 percent savings by optimizing cloud services to align with application needs, eliminating unused assets, implementing storage tiering guidelines, and updating instances to the latest versions.

Once an initial optimization level is achieved, sustaining the results requires training technologists on cloud best practices. This enables them to understand the necessary actions for cost reduction and empowers them to implement these measures. Additionally, it is important to mandate continuous scanning by the FinOps team for new cost-reduction opportunities and to track the outcomes of optimization efforts.

In short, most cloud environments contain cost and performance optimization opportunities, and the steps required to capture them are simple. These opportunities are like hidden treasures: they already exist within the cloud, and organizations can attain significant benefits by unlocking their value.

In Google Cloud, an organization can release unused capacity by acting on the idle VM and rightsizing recommendations that Google Cloud’s Recommender surfaces. It can introduce scheduling and auto-scaling features using Google Kubernetes Engine’s horizontal pod autoscaling or Cloud Scheduler. By aligning service levels to specific application requirements, it can optimize its resources by switching to appropriate instance types or leveraging serverless solutions like Cloud Functions.

In AWS, an organization can release unused capacity by utilizing Instance Scheduler on AWS or leveraging AWS Lambda for automated resource shutdown. It can introduce auto-scaling using AWS Auto Scaling or Amazon Elastic Kubernetes Service (EKS). By aligning service levels to specific application requirements, it can optimize its resources by utilizing AWS Cost Explorer to identify underutilized instances and AWS Trusted Advisor for cost optimization recommendations.

Similarly, in Azure, an organization can release unused capacity using Azure Automation or Azure Functions for automated resource management. They can introduce auto-scaling using Azure Autoscale or Azure Virtual Machine Scale Sets. By aligning service levels to specific application requirements, they can optimize their resources by utilizing Azure Cost Management and Azure Advisor for cost optimization recommendations.

By applying these steps and leveraging the respective tools and features provided by Google Cloud, AWS, and Azure, organizations can unlock the hidden potential within their cloud environments, optimize costs, and enhance performance. The analogy of hidden treasures encourages organizations to explore and take advantage of these opportunities, ultimately maximizing the value they derive from their cloud programs.
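The scheduling lever is easy to quantify with simple arithmetic. The sketch below estimates the weekly saving from running non-production instances only during working hours instead of around the clock. The hourly rate, instance count, and 12-hours-by-5-days schedule are hypothetical inputs, and the model assumes pure pay-per-hour pricing with no reserved or committed capacity.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def weekly_schedule_saving(hourly_rate, instance_count, hours_per_day=12, days_per_week=5):
    """Return (saving, saving_fraction) for a scheduled fleet vs. an always-on one."""
    if not (0 <= hours_per_day <= 24 and 0 <= days_per_week <= 7):
        raise ValueError("schedule must fit inside a week")
    always_on = hourly_rate * instance_count * HOURS_PER_WEEK
    scheduled = hourly_rate * instance_count * hours_per_day * days_per_week
    saving = always_on - scheduled
    fraction = saving / always_on if always_on else 0.0
    return saving, fraction


# Hypothetical dev/test fleet: 20 instances at 0.10 per hour.
saving, fraction = weekly_schedule_saving(hourly_rate=0.10, instance_count=20)
print(f"Weekly saving: {saving:.2f} ({fraction:.0%} of the always-on cost)")
```

Because the fraction depends only on the schedule (60 of 168 hours here), it applies to any fleet that can tolerate being off outside working hours, which makes it a useful first estimate before rolling the lever out to other teams.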

How to avoid it?

To avoid the pitfalls described above, you can take the following steps:

- Conduct a comprehensive assessment: Evaluate your current cloud usage and identify areas where cost and performance optimization opportunities may exist. This assessment should include an analysis of resource utilization, application requirements, and spending patterns.

- Release unused capacity: Identify resources that are no longer needed or underutilized and release them. This could involve shutting down idle instances, terminating unused storage volumes, or decommissioning redundant services. Regularly review your cloud environment to ensure you only pay for resources actively contributing value.

- Implement scheduling and auto-scaling: Leverage your cloud provider’s scheduling and auto-scaling features to adjust resource allocation based on demand dynamically. Schedule non-critical workloads during off-peak hours and automatically scale resources up or down based on predefined thresholds. This ensures optimal resource utilization and cost efficiency.

- Align service levels with application requirements: Review your application workloads and select the appropriate service levels and instance types that match their specific requirements. For example, choose memory-optimized instances for memory-intensive applications and standard instances for general workloads. Leverage serverless compute options for containerized applications to reduce management overhead.

- Leverage cost optimization tools and resources: Cloud providers like Google Cloud, AWS, and Azure offer a variety of cost optimization tools and resources. Familiarize yourself with these tools, such as Google Cloud’s Cloud Billing reports, AWS Cost Explorer, or Azure Cost Management, to gain visibility into your cloud spending. Utilize your cloud provider’s cost optimization recommendations and best practices to identify further cost-saving opportunities.

- Foster a culture of continuous improvement: Educate your teams on cloud cost optimization best practices and encourage them to identify opportunities to optimize costs and improve performance proactively. Establish feedback loops to gather insights from teams successfully implementing cost optimization measures and share these learnings across the organization.

- Regularly monitor and track optimization efforts: Continuously monitor your cloud costs and performance metrics to track the impact of your optimization initiatives. Leverage your cloud provider’s reporting and analytics capabilities to gain insights into cost trends and identify areas for further improvement. Review and refine your optimization strategies based on the data and feedback received.

- Seek external expertise if needed: If you require additional support or expertise, consider engaging with cloud cost optimization specialists or consultants. They can provide guidance tailored to your specific cloud environment and help you implement effective optimization strategies.

By following these steps and maintaining a proactive approach to cloud cost and performance optimization, you can avoid the pitfalls described above. Continuously monitoring and optimizing your cloud environment will help you achieve better cost efficiency and performance and ensure that your cloud spending aligns with your business objectives.
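The "release unused capacity" step can be sketched as a simple filter over utilization data. This example assumes you have already exported CPU utilization samples per instance from your provider's monitoring tool; the record layout, the 5 percent threshold, and the minimum of three samples are illustrative assumptions, not provider defaults.

```python
def find_idle_instances(instances, cpu_threshold=5.0, min_samples=3):
    """Return instances whose CPU never reached the threshold.

    Instances with too few samples are skipped, so that newly launched
    resources are not flagged before there is enough evidence.
    """
    idle = []
    for inst in instances:
        samples = inst["cpu_percent"]
        if len(samples) < min_samples:
            continue
        if max(samples) < cpu_threshold:
            idle.append(inst)
    return idle


def potential_monthly_saving(idle_instances):
    """Sum the monthly cost of the instances flagged as idle."""
    return sum(inst["monthly_cost"] for inst in idle_instances)


# Hypothetical utilization export (percent CPU per sampling window).
fleet = [
    {"name": "web-1", "monthly_cost": 140.0, "cpu_percent": [35.0, 42.5, 38.1, 51.0]},
    {"name": "batch-old", "monthly_cost": 210.0, "cpu_percent": [0.4, 0.9, 0.3, 0.5]},
    {"name": "test-env", "monthly_cost": 95.0, "cpu_percent": [1.2, 2.0, 0.8, 1.1]},
    {"name": "new-api", "monthly_cost": 60.0, "cpu_percent": [0.5]},
]

idle = find_idle_instances(fleet)
print("Idle candidates:", [inst["name"] for inst in idle])
print("Potential monthly saving:", potential_monthly_saving(idle))
```

Treat the output as a list of candidates to confirm with the owning team rather than as an automatic shutdown list; low CPU alone does not prove a resource is unused.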

Ninth: Unlock cloud elasticity to stop paying for unused cloud capacity

Imagine your cloud infrastructure as a house with adjustable walls. The walls represent the capacity of your resources, and the ability to resize them represents cloud elasticity. In theory, this elasticity allows you to expand or shrink your house according to your immediate needs, resulting in lower costs because you only pay for the space you use. However, many companies struggle to use this elasticity effectively, as if their house had rigid walls that cannot easily be resized.

For example, a global telecom operator encountered this issue because it adjusted its cloud capacity manually. While it increased capacity in response to traffic surges, it rarely decreased capacity when demand fell. This becomes problematic during a recession, when customer demand weakens and businesses reduce promotions or offer discounts, leading to decreased traffic and cloud usage.

Visualizing the cloud infrastructure as a house with adjustable walls makes it easier to understand the concept of cloud elasticity. Refactoring applications and utilizing autoscaling features is like adjusting the walls to match the number of occupants in the house, optimizing resource utilization, and reducing unnecessary costs.

In theory, cloud elasticity allows companies to scale their resources up and down to match their current needs, potentially reducing costs by paying only for what is used. However, many companies struggle to use this elasticity effectively because of practices that hinder its benefits. These include rigid and manual provisioning practices, technical debt that prevents the implementation of elasticity features, and overreliance on reserved instances. Consequently, companies end up paying for cloud capacity they do not fully utilize.

To address this challenge, companies should collaborate with their engineering teams to identify applications and workloads that lack elasticity, often because they were “lifted and shifted” to the cloud without optimization. These applications should be refactored, starting with those with the largest footprint. Implementing standard autoscaling features can be relatively simple and provide immediate benefits. Investments in advanced capabilities like containerization can be explored for even more efficient elasticity, but careful consideration is necessary as they may require additional time and effort. Going forward, companies should avoid a “lift and shift” approach in future migrations unless there are strategic reasons, such as data center exits.

Companies can fully leverage cloud elasticity by addressing inelastic applications, implementing autoscaling features, and adopting optimized migration strategies. This allows them to scale their resources dynamically, aligning with real-time needs, optimizing costs, and ensuring efficient cloud utilization.
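A small calculation shows why elasticity matters. The sketch below compares the cost of provisioning for peak demand all day with the cost of scaling capacity hour by hour. The demand curve, the capacity of 100 requests per second per instance, and the hourly rate are hypothetical, and the model ignores scaling delays and minimum billing increments.

```python
import math


def instances_needed(demand, capacity_per_instance, min_instances=1):
    """Smallest instance count that can serve the given demand."""
    return max(min_instances, math.ceil(demand / capacity_per_instance))


def static_cost(hourly_demand, capacity_per_instance, hourly_rate):
    """Cost when capacity is fixed at the peak requirement for every hour."""
    peak = max(instances_needed(d, capacity_per_instance) for d in hourly_demand)
    return peak * len(hourly_demand) * hourly_rate


def elastic_cost(hourly_demand, capacity_per_instance, hourly_rate):
    """Cost when capacity follows demand hour by hour."""
    instance_hours = sum(instances_needed(d, capacity_per_instance) for d in hourly_demand)
    return instance_hours * hourly_rate


# Hypothetical 24-hour demand in requests per second.
demand = [100] * 8 + [400] * 8 + [1000] * 4 + [200] * 4

fixed = static_cost(demand, capacity_per_instance=100, hourly_rate=0.5)
scaled = elastic_cost(demand, capacity_per_instance=100, hourly_rate=0.5)
print(f"Provisioned for peak: {fixed:.2f}")
print(f"Scaled with demand:   {scaled:.2f}")
```

The gap between the two numbers grows with the ratio of peak to average demand, which is why workloads with the spikiest traffic and the largest footprint are the best first candidates for refactoring.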

An organization using Google Cloud can address this issue by identifying inelastic applications and workloads that were “lifted and shifted” from on-premises to the cloud without optimization. Refactoring these applications, starting with those with the largest footprint, can improve elasticity. Google Cloud offers autoscaling features, such as Google Kubernetes Engine’s Horizontal Pod Autoscaler, that automatically adjust resources based on demand. By leveraging these capabilities, companies can achieve more efficient elasticity and cost optimization.

In AWS, companies can work with their engineering teams to identify and refactor inelastic applications. AWS provides autoscaling capabilities like AWS Auto Scaling and Amazon Elastic Kubernetes Service (EKS) that automatically adjust resources to match demand. By utilizing these features and optimizing application architectures, companies can make better use of cloud elasticity and avoid paying for underutilized resources.

Similarly, in Azure, organizations can collaborate with their engineering teams to identify and refactor inelastic applications. Azure offers autoscaling options such as Azure Autoscale and Azure Virtual Machine Scale Sets, which automatically adjust resource capacity. By leveraging these features and optimizing application designs, companies can optimize their cloud usage and reduce unnecessary costs.

Organizations can fully exploit cloud elasticity by working closely with engineering teams, identifying inelastic applications, and refactoring them to leverage autoscaling capabilities. This enables them to dynamically scale resources based on demand, optimize costs, and avoid overprovisioning or underutilization. The analogy of a house with adjustable walls emphasizes the adaptability of cloud resources, highlighting the importance of optimizing their usage across different cloud providers.

How to avoid it?

To avoid the challenges associated with underutilizing cloud elasticity, you can follow these steps:

- Evaluate application requirements: Assess your applications and workloads to determine their specific scalability needs. Identify applications that were “lifted and shifted” to the cloud without optimization and prioritize them for refactoring. This evaluation helps you understand the elasticity requirements of your applications.

- Refactor applications for elasticity: Collaborate with your engineering teams to refactor applications and optimize them for cloud elasticity. This process involves redesigning and rearchitecting applications to leverage native scaling capabilities provided by your cloud provider. This could include containerization, microservices architecture, and serverless computing.

- Implement autoscaling: Leverage autoscaling features provided by your cloud provider to adjust resources based on demand automatically. Configure autoscaling policies and thresholds that align with your application’s scalability requirements. This ensures that your resources expand or contract dynamically to match workload fluctuations, optimizing resource usage and cost efficiency.

- Utilize cloud-native services: Take advantage of cloud-native services and managed offerings that inherently support elasticity. For example, use serverless computing platforms like Google Cloud Functions, AWS Lambda, or Azure Functions, which automatically scale based on request volume. Leveraging these services reduces the burden of manual scalability management.

- Monitor and optimize resource utilization: Regularly monitor the utilization of your cloud resources and analyze performance metrics. Utilize cloud provider tools such as Google Cloud Monitoring, Amazon CloudWatch, or Azure Monitor to gain insights into resource usage patterns. Identify underutilized resources and take corrective actions, such as rightsizing instances or adjusting autoscaling policies, to optimize resource utilization.

- Continuous testing and validation: Implement robust testing and validation processes to ensure the refactored applications and autoscaling configurations perform as expected. Test different load scenarios to verify that the elasticity mechanisms respond appropriately and effectively scale resources based on demand.

- Embrace a cloud-native mindset: Encourage a cloud-native mindset within your organization, promoting the use of cloud-native design patterns and practices. This includes designing applications to be inherently scalable, utilizing cloud provider-native services, and leveraging infrastructure-as-code to automate resource provisioning and scaling.

- Regularly review and optimize: Continuously review and optimize your cloud environment for elasticity. Stay updated on new features and best practices offered by your cloud provider. Regularly assess your applications’ performance, scalability, and cost-effectiveness and adjust your elasticity strategies accordingly.

By following these steps and embracing the full potential of cloud elasticity, you can optimize resource utilization, improve scalability, and reduce unnecessary costs. Leveraging the native scaling features, refactoring applications, and adopting cloud-native practices enable you to fully exploit the elasticity benefits offered by cloud providers like Google Cloud, AWS, and Azure.
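To show what an autoscaling policy actually computes, here is a minimal sketch modeled on the calculation the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the replica bounds, and the way the tolerance is applied are simplifications for illustration; the real controller also handles stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    If the metric is within `tolerance` of the target, the replica count is
    left unchanged to avoid constant small adjustments.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Average CPU at 90% against a 60% target: scale out from 4 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))
# Average CPU at 20% against a 60% target: scale in from 6 replicas.
print(desired_replicas(6, current_metric=20, target_metric=60, min_replicas=2))
```

The scale-in case is the one that saves money: a policy that only ever scales out reproduces the manual provisioning pattern described earlier, so it is worth testing that your thresholds really do shrink capacity when load drops.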

Tenth: Slowing down cloud migrations to save costs

Imagine you’re a homeowner who has already invested in a small, outdated house. Avoiding any changes or improvements seems to save money because you’ve already paid for the house. However, this mindset neglects the potential benefits of moving into a modern, energy-efficient home.

Staying in the small, outdated house may seem cost-effective in the short term because you’ve already paid for it, but it fails to consider the long-term advantages of a modern home, such as reduced energy consumption, improved functionality, and increased comfort.

In the same way, organizations may believe that working within their on-premises environments, which they have already invested in, can reduce costs. However, this approach overlooks the potential cost savings, scalability, and agility of a cloud migration.

Migrating to the cloud is like moving into that modern, energy-efficient home: it provides cost optimization, enhanced scalability, streamlined operations, and access to advanced services and technologies.

A common misconception is that organizations can save costs by slowing down cloud migrations and relying solely on their existing on-premises environments, which they’ve already invested in. However, maintaining on-premises data centers requires continuous operational support, similar to maintaining a garden you already have. Just like a garden requires ongoing expenses for labor, water, fertilizers, and maintenance to keep it healthy and thriving, on-premises environments demand resources for labor, utilities, leases, and licenses to ensure system stability, manage hardware refresh cycles, and address outages. Cutting costs in these areas can lead to unforeseen issues and limited scalability. On the other hand, thoughtful and targeted cloud migrations help reduce costs and position the business for rapid growth once economic conditions improve.

On Google Cloud, organizations can prioritize migrating the workloads that generate value and enable critical business initiatives. It’s like transplanting your carefully cultivated garden to a well-equipped greenhouse (Google Cloud) where you can leverage automated irrigation systems, optimal temperature control, and advanced fertilizers (Google Cloud’s services and resources). This ensures your garden (workloads) thrives with efficiency, scalability, and cost-effectiveness.

Similarly, on AWS, organizations can focus on migrating the workloads that provide significant value and support critical business initiatives. It’s like moving your garden to a state-of-the-art greenhouse (AWS) equipped with smart watering systems, automated climate control, and specialized nutrients (AWS services). This helps your garden (workloads) grow with optimal efficiency, adaptability, and cost savings.

In Azure, organizations can prioritize migrating workloads that have sizable operational overhead and can benefit from cloud efficiencies. It’s like transplanting your garden to a well-designed botanical garden (Azure) with automated sprinklers, intelligent climate control, and expert horticulturists (Azure services). This ensures your garden (workloads) thrives with streamlined operations, improved productivity, and reduced costs.

Organizations can realize cost reduction, operational efficiency, and increased scalability by carefully selecting and migrating value-generating workloads to the cloud. The analogy of maintaining a garden you already have helps illustrate the ongoing costs and limitations of on-premises environments while highlighting the benefits of strategic cloud migrations in Google Cloud, AWS, and Azure.

How to avoid it?

To avoid the misconception that slowing down cloud migrations and sticking to on-premises environments reduces costs, follow these steps:

- Educate yourself: Gain a comprehensive understanding of cloud migration’s benefits and potential cost advantages. Learn about the different cloud service providers, their offerings, and the scalability and cost-saving features they provide.

- Evaluate cost-saving opportunities: Conduct a thorough analysis of your current on-premises infrastructure costs and compare them to the potential cost savings cloud solutions offer. Consider hardware maintenance, software licensing, energy consumption, and scalability factors. Identify areas where cloud migration can lead to significant cost reductions.

- Perform a workload assessment: Assess your existing workloads to identify those well-suited for migration to the cloud. Look for workloads that have predictable resource requirements, can benefit from scalability, or have high infrastructure maintenance costs. Prioritize migrating these workloads first to maximize cost savings.

- Develop a migration strategy: Create a detailed plan for migrating your workloads to the cloud. Determine the most suitable cloud service provider based on your specific needs and the types of workloads you’re migrating. Develop a timeline, allocate resources, and define the migration approach (e.g., lift-and-shift, rearchitecting, or cloud-native services).

- Optimize cloud resources: Once migrated, continuously monitor and optimize your resources to ensure cost efficiency. Leverage cloud provider tools and services to monitor resource utilization, identify areas of waste or overprovisioning, and implement cost-saving measures such as rightsizing instances, utilizing reserved instances, or implementing auto-scaling.

- Embrace cloud-native practices: Take advantage of cloud-native practices and services that promote cost optimization. These include using serverless computing, containers, and automation to streamline operations and minimize resource waste. Emphasize cloud-native development practices to ensure your applications are designed for optimal cost efficiency.

- Regularly review and adjust: Continuously evaluate your cloud usage and costs to identify areas for improvement. Regularly review your cloud provider’s cost management tools and updates to stay informed about cost-saving features and best practices. Adjust your strategies and resource allocations as needed to maximize cost savings.

By following these steps, you can avoid the misconception of relying solely on on-premises environments to reduce costs. Embracing cloud migration and implementing cost optimization practices will allow you to leverage cloud solutions’ scalability, efficiency, and cost advantages.
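The "evaluate cost-saving opportunities" step can be sketched as a simple monthly comparison. Everything here is an assumption for illustration: the cost categories mirror the ones mentioned above (hardware, licenses, energy, labor, leases), and the figures are made up. A real analysis would use your own invoices and a cloud estimate from your provider's pricing calculator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OnPremCosts:
    """Hypothetical monthly on-premises cost categories, in one currency."""

    hardware_amortization: float
    software_licenses: float
    energy: float
    labor: float
    facility_lease: float

    def monthly_total(self) -> float:
        return (
            self.hardware_amortization
            + self.software_licenses
            + self.energy
            + self.labor
            + self.facility_lease
        )


def monthly_savings(on_prem: OnPremCosts, cloud_monthly: float) -> float:
    """Positive when the estimated cloud bill is lower than on-premises."""
    return on_prem.monthly_total() - cloud_monthly


def payback_months(migration_cost: float, savings: float) -> Optional[float]:
    """Months needed to recover a one-off migration cost, or None if never."""
    if savings <= 0:
        return None
    return migration_cost / savings


if __name__ == "__main__":
    # Illustrative numbers only.
    on_prem = OnPremCosts(4000.0, 1500.0, 800.0, 6000.0, 1200.0)
    savings = monthly_savings(on_prem, cloud_monthly=9000.0)
    print(savings, payback_months(27000.0, savings))
```

Including the one-off migration cost keeps the comparison honest: a workload with small monthly savings and a long payback period is a weaker candidate than one that pays for itself within a few months.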

Eleventh: Not doing the basics well =/

Cloud computing offers great flexibility and scalability, but it also makes managing costs effectively a challenge. Without proper oversight, cloud costs can quickly escalate and impact your bottom line. This is particularly crucial for SaaS companies, as cloud spending feeds directly into their cost of goods sold (COGS), affecting their gross margins and valuation.

To avoid falling into cost management pitfalls, it’s important to be aware of common mistakes that can hinder your efforts to control cloud costs. By identifying and rectifying these mistakes, you can optimize your cloud spending and achieve better financial outcomes.

When operating in the cloud, making the right decisions can be challenging because there’s a lot of ground to cover, especially around cost. Let’s explore some common mistakes businesses make and how to avoid them, using analogies and examples in Google Cloud, AWS, and Azure.

- Not maximizing your savings plans and reservations:

- It’s like paying the full retail price for a plane ticket when you could have saved by booking in advance or using a frequent flyer program.

- It’s similar to purchasing a monthly gym membership but only using it once a week.

Google Cloud: Take advantage of Google Cloud’s committed use discounts (CUDs) to secure discounted rates for long-term usage of resources like Compute Engine instances. Committing to a specific usage level can significantly reduce costs over time, provided you actually consume what you commit to.
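To make the gym-membership analogy concrete, here is a minimal sketch of the break-even reasoning behind any commitment-based discount. It assumes a simplified model in which you pay the discounted rate for every hour of the term whether you use it or not; the 25% discount is a made-up figure, not a quoted price from any provider.

```python
def break_even_utilization(discount: float) -> float:
    """Fraction of committed hours you must use for the commitment to pay off.

    Simplified model: committed cost = (1 - discount) * rate * all hours,
    on-demand cost = rate * used hours. They are equal when
    used / all = 1 - discount.
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return 1.0 - discount


def commitment_saves_money(expected_utilization: float, discount: float) -> bool:
    """True when expected usage is above the break-even point."""
    return expected_utilization > break_even_utilization(discount)


if __name__ == "__main__":
    # Hypothetical 25% discount: the commitment only wins above 75% usage.
    print(break_even_utilization(0.25))
    print(commitment_saves_money(0.90, 0.25))
    print(commitment_saves_money(0.60, 0.25))
```

The takeaway is that a commitment is only a saving for capacity you keep busy, which is why steady baseline workloads are the usual candidates.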

- Not exploring Spot Instances:

- It’s like purchasing a full-price product when you could have bought it at a discounted rate during a limited-time sale.

- It’s similar to renting a vacation home at regular rates instead of opting for last-minute deals or off-season prices.

AWS: Consider using Amazon EC2 Spot Instances for non-critical, fault-tolerant workloads that can tolerate interruptions. Spot Instances offer significant cost savings, up to 90% compared to On-Demand instances, with the caveat that AWS can reclaim them on short notice (a two-minute warning) when it needs the capacity back.
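Because interrupted work sometimes has to be redone, the headline discount overstates the real saving. The sketch below estimates an effective hourly cost under a deliberately simple assumption: a fixed fraction of compute time is repeated after interruptions. The rate, discount, and rework fraction are hypothetical inputs, not AWS figures.

```python
def effective_spot_rate(
    on_demand_rate: float, spot_discount: float, rework_fraction: float
) -> float:
    """Hourly cost of useful work on Spot, including repeated work.

    spot_discount: fraction off the on-demand rate (0.5 means 50% off).
    rework_fraction: extra compute time spent redoing interrupted work,
    as a fraction of the useful work (0.25 means 25% more hours).
    """
    if not 0.0 <= spot_discount < 1.0:
        raise ValueError("spot_discount must be in [0, 1)")
    if rework_fraction < 0.0:
        raise ValueError("rework_fraction must be non-negative")
    return on_demand_rate * (1.0 - spot_discount) * (1.0 + rework_fraction)


def effective_saving(
    on_demand_rate: float, spot_discount: float, rework_fraction: float
) -> float:
    """Saving versus on-demand as a fraction; negative means Spot costs more."""
    spot = effective_spot_rate(on_demand_rate, spot_discount, rework_fraction)
    return 1.0 - spot / on_demand_rate


if __name__ == "__main__":
    # Hypothetical: 50% discount, 25% of the work repeated after interruptions.
    print(effective_spot_rate(1.0, 0.5, 0.25))
    print(effective_saving(1.0, 0.5, 0.25))
```

Workloads that checkpoint frequently keep the rework fraction small, which is one reason batch and stateless jobs tend to benefit the most.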

- Making cloud savings the finance team’s responsibility:

- It’s like expecting the accounting department to make engineering decisions and optimize product development processes.

Azure: Empower your engineering team to take an active role in cost optimization. Provide them with visibility into Azure costs using tools like Azure Cost Management + Billing. With this insight, engineers can identify areas of inefficiency and make informed decisions to reduce costs while maintaining performance.

- Not converting EBS volumes to gp3, especially for EC2 workloads:

- It’s like driving a car with an outdated engine that consumes more fuel and lacks the latest fuel-saving technology.

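AWS: Migrate your EC2 instances’ EBS storage volumes from gp2 to gp3. With gp2, performance is tied to volume size and burst credits. gp3 instead provides a consistent baseline of 3,000 IOPS and 125 MiB/s regardless of volume size and lets you provision additional IOPS and throughput independently of capacity, at a per-GB price that AWS lists as up to 20% lower than gp2. By taking advantage of gp3, you can often achieve the same or better performance while reducing storage costs.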
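A quick way to check whether a given volume is cheaper on gp3 is to compare the monthly bills. In this sketch the baseline constants (3,000 IOPS and 125 MiB/s included with every gp3 volume) reflect how gp3 is billed, but every price passed in is an illustrative placeholder; look up the current rates for your region before drawing conclusions.

```python
# gp3 includes this much performance at no extra charge.
GP3_BASELINE_IOPS = 3000
GP3_BASELINE_THROUGHPUT_MIBPS = 125


def gp2_monthly_cost(size_gib: float, price_per_gib: float) -> float:
    """gp2 is billed on capacity only; performance scales with size."""
    return size_gib * price_per_gib


def gp3_monthly_cost(
    size_gib: float,
    iops: int,
    throughput_mibps: int,
    price_per_gib: float,
    price_per_extra_iops: float,
    price_per_extra_mibps: float,
) -> float:
    """gp3 bills capacity plus any IOPS/throughput above the baseline."""
    extra_iops = max(0, iops - GP3_BASELINE_IOPS)
    extra_mibps = max(0, throughput_mibps - GP3_BASELINE_THROUGHPUT_MIBPS)
    return (
        size_gib * price_per_gib
        + extra_iops * price_per_extra_iops
        + extra_mibps * price_per_extra_mibps
    )


if __name__ == "__main__":
    # Placeholder prices for a 500 GiB volume needing 4,000 IOPS.
    gp2 = gp2_monthly_cost(500, price_per_gib=0.10)
    gp3 = gp3_monthly_cost(
        500, 4000, 125,
        price_per_gib=0.08,
        price_per_extra_iops=0.005,
        price_per_extra_mibps=0.04,
    )
    print(gp2, gp3)
```

The comparison matters most for volumes that were oversized on gp2 purely to obtain more IOPS, since gp3 lets you buy that performance without the extra capacity.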

- Not exploring intelligent tiering for S3 buckets:

- It’s like storing all your belongings in a single storage unit, regardless of their frequency of use or value.

- It’s similar to paying the same monthly fee for a storage unit, whether you access it daily or only occasionally.

AWS: Enable S3 Intelligent-Tiering so that objects are automatically moved between access tiers as their access patterns change. On Azure, Blob Storage’s lifecycle management feature plays a similar role, moving data between tiers based on rules you define. In both cases, you optimize storage costs by aligning the data’s storage class with how often it is actually used.
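The idea behind tiering can be sketched as a rule on how long an object has gone untouched. This is only a conceptual illustration: the tier names and the 30-day and 90-day thresholds are assumptions, and the managed features mentioned above apply their own rules without any code from you.

```python
from datetime import date


def choose_tier(
    last_accessed: date,
    today: date,
    cool_after_days: int = 30,
    archive_after_days: int = 90,
) -> str:
    """Pick a storage tier from the number of days since last access.

    Thresholds are hypothetical defaults; tune them to your own access
    patterns and to the retrieval costs of the colder tiers.
    """
    idle_days = (today - last_accessed).days
    if idle_days >= archive_after_days:
        return "archive"
    if idle_days >= cool_after_days:
        return "cool"
    return "hot"


if __name__ == "__main__":
    today = date(2024, 6, 1)
    for last in (date(2024, 5, 25), date(2024, 5, 1), date(2024, 1, 1)):
        print(last, choose_tier(last, today))
```

Colder tiers usually trade a lower storage price for higher retrieval cost or latency, so thresholds that are too aggressive can end up costing more than they save.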

By avoiding these mistakes and adopting cost optimization practices tailored to your cloud platform, you can effectively manage your cloud costs, maximize savings, and achieve better financial outcomes.

As we conclude this discussion on the common errors in cost management, we hope you have gained valuable insights and a deeper understanding of the challenges organizations face in optimizing cloud costs. Navigating the complexities of cost management requires constant vigilance, strategic decision-making, and a commitment to continuous improvement.

We invite you to join us on this journey as we unravel the secrets to maximizing value, reducing waste, and unlocking the full potential of your cloud investments. Together, let’s chart a course toward cost efficiency and financial success in the ever-expanding realm of cloud computing.

Thank you for accompanying us thus far, and we look forward to sharing more insights in our upcoming articles. Stay tuned, and happy cost optimizing!

“This must be the work of an enemy’s Stand!” You might think that about Cloud Cost Management, but, like this reference, it’s just hard work, the hardest kind *blink*

See ya soon, Cloud Cost Manager “user”!

o/